Linux network teaming

Latest revision as of 17:01, 11 May 2023

Introduction

Network teaming, or bonding, involves combining multiple physical network interfaces (NICs) into one virtual interface for increased reliability or throughput. It is well-supported on the APS network. However, there are many possible pitfalls and areas of confusion when configuring bonded interfaces.

Streams or flows

The aggregated bandwidth of a bonded link is not the same as the bandwidth available to a single "stream" or "flow" of network packets. What defines a single stream? Here are the usual possibilities (see the runner.tx_hash setting in Linux teamd):

- Layer 2 only: Any network packet with the same source and destination MAC is part of the same "stream".

- Layer 3: Any network packet with the same source and destination IP is part of the same "stream".

- Layer 4: Any network packet with the same source and destination IP and source and destination TCP/UDP port is part of the same "stream".

Depending on how streams are distributed among the available interfaces, the aggregated transmission bandwidth for all streams may be the same as, or different to, the transmission bandwidth available for a single stream.
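The three stream definitions above can be illustrated with a minimal Python sketch. The `Packet` type, the `stream_key` helper, and the addresses and ports are hypothetical names and values invented for illustration; they are not part of teamd.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    """Only the header fields that matter for stream classification."""
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int


def stream_key(pkt: Packet, layer: int) -> tuple:
    """Return the tuple that identifies the packet's stream at a given layer."""
    if layer == 2:
        return (pkt.src_mac, pkt.dst_mac)
    if layer == 3:
        return (pkt.src_ip, pkt.dst_ip)
    if layer == 4:
        return (pkt.src_ip, pkt.dst_ip, pkt.src_port, pkt.dst_port)
    raise ValueError(f"unsupported layer: {layer}")


# Two packets between the same hosts that differ only in source port.
a = Packet("aa:aa:aa:aa:aa:01", "bb:bb:bb:bb:bb:01",
           "10.0.0.1", "10.0.0.2", 50000, 5201)
b = a._replace(src_port=50001)

for layer in (2, 3, 4):
    same = stream_key(a, layer) == stream_key(b, layer)
    print(f"Layer {layer}: same stream? {same}")
```

Under these assumptions the two packets belong to one stream at Layer 2 and Layer 3, and to two different streams only at Layer 4.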

Bonding modes

The bonding mode decides how each stream is handled. Using the teamd terminology (see runner.name):

- broadcast

  - every packet of every stream is sent over all bonded interfaces (duplicated).

  - aggregate bandwidth = single link bandwidth. single-stream bandwidth = single link bandwidth.

- roundrobin

  - the packets of every stream are cycled between interfaces in order. Packet 1 -> interface 1, Packet 2 -> interface 2, Packet 3 -> interface 1, etc.

  - aggregate bandwidth = sum of all links. single-stream bandwidth = a bit less than the sum of all links (60-80% perhaps), because packets will sometimes arrive out-of-order.

- activebackup

  - the packets of every stream are sent over the designated "active" connection; if that fails, they are sent over the next backup.

  - aggregate bandwidth = single link bandwidth. single-stream bandwidth = single link bandwidth.

- loadbalance

  - the transmitting computer hashes the stream info (Layer 2, 3, or 4 described above) modulo the number of interfaces, and uses that to assign it to an interface. For example, if loadbalance+Layer 2 is selected, any packet which satisfies hash(src MAC, dest MAC) == 1 will be sent over interface 1.

  - aggregate bandwidth = sum of all links. single-stream bandwidth = single link bandwidth, because a single stream will always hash to transmit over the same interface.

- 802.3ad / LACP

  - allows the transmitter to automatically notify the switch/gateway that the physical ports are bonded. After that all of the behavior is the same as loadbalance above.

  - aggregate bandwidth = sum of all links. single-stream bandwidth = single link bandwidth, as for loadbalance.
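The per-packet behaviour of each mode can be sketched as a toy simulation. This is only an illustration under stated assumptions: `interfaces_for_packet` is a hypothetical helper, interfaces are numbered from 0 rather than 1, and CRC32 is a stand-in for whatever hash teamd actually computes.

```python
import zlib


def interfaces_for_packet(runner, stream_id, packet_index, n_links, active=0):
    """Return the list of interface indices a packet is transmitted on.

    runner       -- mode name, following the runner names used above
    stream_id    -- any string identifying the stream (Layer 2/3/4 key)
    packet_index -- position of the packet within its stream (0-based)
    active       -- index of the currently active link (activebackup only)
    """
    if runner == "broadcast":
        return list(range(n_links))        # duplicated on every link
    if runner == "roundrobin":
        return [packet_index % n_links]    # cycle through the links
    if runner == "activebackup":
        return [active]                    # everything on the active link
    if runner in ("loadbalance", "lacp"):
        # Stand-in hash (assumption): the same stream always maps to the
        # same link, which is the property that matters here.
        return [zlib.crc32(stream_id.encode()) % n_links]
    raise ValueError(f"unknown runner: {runner}")


for runner in ("broadcast", "roundrobin", "activebackup", "loadbalance"):
    sent = [interfaces_for_packet(runner, "stream-A", i, n_links=2)
            for i in range(4)]
    print(f"{runner:12s} {sent}")
```

Only roundrobin spreads the packets of a single stream over more than one link without duplicating them, which is why it is the only mode whose single-stream bandwidth can exceed that of one link.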

Examples

Maximum throughput (one-to-one)

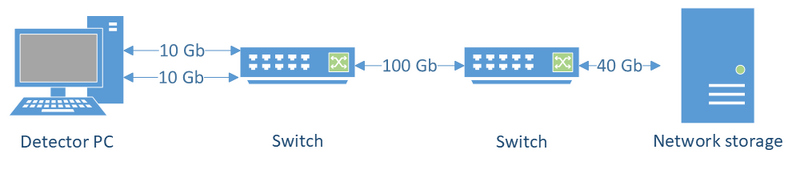

Consider a simple configuration where we are trying to get maximum throughput from a single detector PC to network storage. The connection from the detector PC is the bottleneck so we add an extra interface card. How should we configure the two available interfaces?

There are only two ways to get more transmission bandwidth over the bonded link than a single link provides (10 Gb/s):

- Use roundrobin mode. No application support or cooperation is required. Packets can arrive out-of-order, which may increase CPU load on the receiver or cause retransmissions, so the usable bandwidth may be only about 150% of a single link.

- Use loadbalance mode and multiple streams which hash to different interfaces. This requires application support to generate multiple streams, and an appropriate stream definition/hashing algorithm must be chosen. For example, if Layer 4 hashing is chosen, the application can transmit on two separate TCP ports which hash to interface 1 and 2. The receiving application must then reconstruct the data into a single data stream, if required.

Another option is to avoid network teaming entirely, and configure each interface with separate IPs, guaranteeing both transmissions can happen in parallel.
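For the loadbalance option, the application needs ports that land on different interfaces. The following sketch shows the idea with a deliberately simple XOR-based toy hash; it is not teamd's real hash, and the function names, addresses, and the fixed source port are assumptions made for illustration. In practice the ports would have to be found against the hash actually in use, for example by observing per-interface traffic counters.

```python
import ipaddress


def toy_l4_interface(src_ip, dst_ip, src_port, dst_port, n_links):
    """Toy Layer 4 hash (assumption): XOR all fields, modulo link count."""
    h = (int(ipaddress.ip_address(src_ip))
         ^ int(ipaddress.ip_address(dst_ip))
         ^ src_port
         ^ dst_port)
    return h % n_links


def pick_dst_ports(src_ip, dst_ip, src_port, candidates, n_links):
    """Pick one destination port per interface from the candidate ports.

    Returns a dict mapping interface index -> first candidate port that
    hashes to it. The dict has fewer than n_links entries if the
    candidates do not cover every interface.
    """
    chosen = {}
    for port in candidates:
        iface = toy_l4_interface(src_ip, dst_ip, src_port, port, n_links)
        chosen.setdefault(iface, port)
        if len(chosen) == n_links:
            break
    return chosen


# Hypothetical detector PC (10.0.0.1) sending to storage (10.0.0.2)
# from a fixed source port, with two bonded links.
ports = pick_dst_ports("10.0.0.1", "10.0.0.2", 50000,
                       range(5201, 5301), n_links=2)
print(ports)  # {0: 5201, 1: 5202} with this toy hash
```

With one stream per chosen port, each stream is limited to a single link, but the two together can use both links.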

Testing

iperf3 is a common tool used to test network bandwidth between two points. Run iperf3 -s on one computer, and iperf3 -c {hostname} on another. By default, iperf3 will only create a single network stream. Adding the --parallel {n} flag causes the iperf3 client to open {n} connections to the server, each from a different TCP/UDP source port. If loadbalance+Layer 4 hashing is chosen on the bonded interfaces, this allows multi-stream testing across the bonded network interfaces. Note that iperf3 releases before 3.16 still run all of these streams within a single thread and on a single CPU core, so the CPU can become the bottleneck.

Alternatively, start up entirely separate iperf3 server instances, listening on different ports, and run several clients in parallel. This can allow the use of multiple network streams (assuming Layer 4 hashing) and CPU cores.
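The multi-instance approach can be scripted. Below is a minimal sketch that builds the iperf3 command lines and starts several clients at once. The host name `storage01` and the port numbers are placeholders, and the helper functions are hypothetical. It uses only the standard iperf3 options `-s`, `-c`, `-p` (port) and `-t` (duration in seconds), and assumes iperf3 is installed on both machines.

```python
import subprocess


def server_cmd(port):
    """Command line for one iperf3 server listening on `port`."""
    return ["iperf3", "-s", "-p", str(port)]


def client_cmd(host, port, seconds=10):
    """Command line for one iperf3 client connecting to host:port."""
    return ["iperf3", "-c", host, "-p", str(port), "-t", str(seconds)]


def run_clients(host, ports, seconds=10):
    """Start one client per port at the same time and wait for all.

    Each client is a separate process, so the streams can be scheduled
    on different CPU cores. Returns the list of exit codes.
    """
    procs = [subprocess.Popen(client_cmd(host, p, seconds)) for p in ports]
    return [proc.wait() for proc in procs]


# On the receiving machine, start one server per port, for example:
#   for port in (5201, 5202): subprocess.Popen(server_cmd(port))
# On the sending machine, run the clients in parallel:
#   run_clients("storage01", [5201, 5202])
print(" ".join(client_cmd("storage01", 5201)))
```

Whether the streams actually use different bonded interfaces still depends on how each stream's addresses and ports hash, so it is worth checking the per-interface counters while the test runs.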