Difference between revisions of "RRM 3-14 Concepts"

(Promote section on link MS flags by 1 level) |

|||

| (50 intermediate revisions by 4 users not shown) | |||

| Line 7: | Line 7: | ||

This chapter covers the general functionality that is found in all database records. The topics covered are I/O scanning, I/O address specification, data conversions, alarms, database monitoring, and continuous control: | This chapter covers the general functionality that is found in all database records. The topics covered are I/O scanning, I/O address specification, data conversions, alarms, database monitoring, and continuous control: | ||

* [[#Scanning Specification|''Scanning Specification'']] describes the various conditions under which a record is processed. | * [[#Scanning Specification|''Scanning Specification'']] describes the various conditions under which a record is processed. | ||

* [[#Address Specification|''Address Specification'']] explains the source of inputs and the destination of outputs. | * [[#Address Specification|''Address Specification'']] explains the source of inputs and the destination of outputs. | ||

| Line 16: | Line 15: | ||

These concepts are essential in order to understand how the database interfaces with the process. | These concepts are essential in order to understand how the database interfaces with the process. | ||

EPICS databases can be created with visual tools (VDCT, CapFast) or by manually writing a database text file such as "myDatabase.db". The Visual Database Configuration Tool (VDCT), a Java application from Cosylab, is a more modern tool for database creation and editing that runs on Linux, Windows, and Sun. | |||

= Scanning Specification = | = Scanning Specification = | ||

| Line 29: | Line 30: | ||

== Periodic Scanning == | == Periodic Scanning == | ||

The following periods for scanning database records are available, though EPICS can be configured to recognize more scan periods: | The periodic scan tasks run as close to the frequency specified as possible. When each periodic scan task starts, it calls the gettime routine, then processes all of the records on this period. After the processing, gettime is called again and this thread sleeps the difference between the scan period and the time to process the records. If the 1 second scan records take 100 milliseconds to process, then the 1 second scan period will start again 900 milliseconds after completion. The following periods for scanning database records are available, though EPICS can be configured to recognize more scan periods: | ||

* <code> 10 second</code> | * <code> 10 second</code> | ||

* <code> 5 second</code> | * <code> 5 second</code> | ||

| Line 44: | Line 44: | ||

Here is an example of a <code>menuScan.dbd</code> file, which has the default menu choices for all periods listed above as well as choices for event scanning, passive scanning, and I/O interrupt scanning: | Here is an example of a <code>menuScan.dbd</code> file, which has the default menu choices for all periods listed above as well as choices for event scanning, passive scanning, and I/O interrupt scanning: | ||

menu(menuScan) { | menu(menuScan) { | ||

choice(menuScanPassive,"Passive") | choice(menuScanPassive,"Passive") | ||

| Line 58: | Line 57: | ||

} | } | ||

The first three choices must appear first and in the order shown. The remaining definitions are for the periodic scan rates, which must appear in order from slowest to fastest (the order directly controls the thread priority assigned to each scan rate, and faster scan rates should be assigned higher thread priorities). The menu choice strings are read during scan initialization, when the IOC initializes. The number of periodic scan rates and the period of each rate are determined from the menu choice strings. Thus periodic scan rates can be changed by changing menuScan.dbd and loading this version via dbLoadDatabase. The only requirement is that each periodic choice string must begin with a numeric value specified in units of seconds. For example, to add a choice for 0.015 seconds, add the following line after the 0.1 second choice: | |||

choice(menuScan_015_second, " .015 second") | |||

Scan periods can range from one clock tick (vxWorks out of the box supports 0.015 seconds, or a maximum rate of 60 Hz) to the maximum number of ticks available on the system. Note, however, that the order of the choices is essential: the first three choices must appear in the above order, and the remaining choices must follow in descending order, with the longest period first and the shortest last. | |||
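The timing and ordering rules for periodic scanning can be illustrated with a small Python sketch. It is not the IOC implementation: the function names are hypothetical, the list of choices is only an illustrative subset, and clamping the sleep time at zero when processing overruns the period is an assumption.

```python
def parse_period(choice: str) -> float:
    """Return the period in seconds that a periodic menu choice string begins with."""
    return float(choice.split()[0])


def sleep_time(period: float, processing_time: float) -> float:
    """Time the scan thread sleeps after one pass over its records.

    Clamping at zero for an overrun is an assumption of this sketch.
    """
    return max(period - processing_time, 0.0)


# Illustrative subset of periodic choices, slowest first, plus the added 0.015 s rate.
choices = ["10 second", "5 second", "1 second", ".1 second", " .015 second"]
periods = [parse_period(c) for c in choices]

# The choices must be listed from the longest period to the shortest.
is_descending = all(a > b for a, b in zip(periods, periods[1:]))

# A 1 second scan whose records take 100 ms to process sleeps for about 900 ms.
remaining = sleep_time(1.0, 0.1)
```

Because only the leading numeric value of each choice string is used, the text after the number ("second") serves as a label for the reader.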

The range of values for scan periods can be from one clock tick | |||

== Event Scanning == | == Event Scanning == | ||

| Line 70: | Line 68: | ||

=== I/O Interrupt Events === | === I/O Interrupt Events === | ||

Scanning on I/O interrupt causes a record to be processed when | Scanning on I/O interrupt causes a record to be processed when a driver posts an I/O Event. In many cases these events are posted in the interrupt service routine. For example, if an analog input record gets its value from a Xycom 566 Differential Latched card and it specifies I/O interrupt as its scanning routine, then the record will be processed each time the card generates an interrupt (not all types of I/O cards can generate interrupts). Note that even though some cards cannot actually generate interrupts, some driver support modules can simulate interrupts. In order for a record to scan on I/O interrupts, its SCAN field must specify <code>I/O Intr</code>. | ||

=== User-defined Events === | === User-defined Events === | ||

| Line 79: | Line 77: | ||

== Passive Scanning == | == Passive Scanning == | ||

Passive records are processed when they are referenced by other records through their link fields or when a channel access put is done to them. | |||

=== Channel Access Puts to Passive Scanned Records === | |||

When a channel access put is done to a record, the field being written has an attribute that determines whether the put causes record processing. For all records, putting to the VAL field causes record processing. Consider a binary output that has a SCAN of Passive. If an operator display has a button on the VAL field, every time the button is pressed, a channel access put is sent to the record. When the VAL field is written, the Passive record is processed and the newly converted RVAL is written to the device specified in the OUT field through the device support specified by DTYP. Fields that change the way a record behaves typically cause the record to process. Another field that would cause the binary output to process is ZSV, which is the alarm severity if the binary output record is in state Zero (0). If the record is in state 0 and the severity of being in that state changes from No Alarm to Minor Alarm, the only way to catch this on a SCAN Passive record is to process it. The fields that cause binary output records to process are configured in the bo.dbd file. The ZSV severity is configured as follows: | |||

: field(ZSV,DBF_MENU) {<br> | |||

:: prompt("Zero Error Severity")<br> | |||

:: promptgroup(GUI_ALARMS)<br> | |||

:: pp(TRUE)<br> | |||

:: interest(1)<br> | |||

:: menu(menuAlarmSevr)<br> | |||

: }<br> | |||

where the line "pp(TRUE)" indicates that the record is processed when a channel access put is done to this field. | |||

=== Database Links to Passive Record === | |||

The records in the process database use link fields to configure data passing and scheduling (or processing). These fields are either INLINK, OUTLINK, or FWDLINK fields. | |||

==== Forward Links ==== | |||

In the database definition file (.dbd) these fields are defined as follows:<br> | |||

: field(FLNK,DBF_FWDLINK) {<br> | |||

:: prompt("Forward Process Link")<br> | |||

:: promptgroup(GUI_LINKS)<br> | |||

:: interest(1)<br> | |||

: }<br> | |||

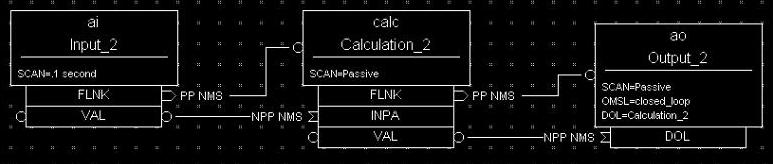

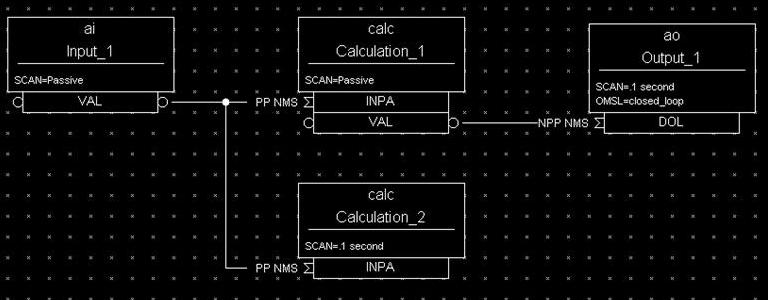

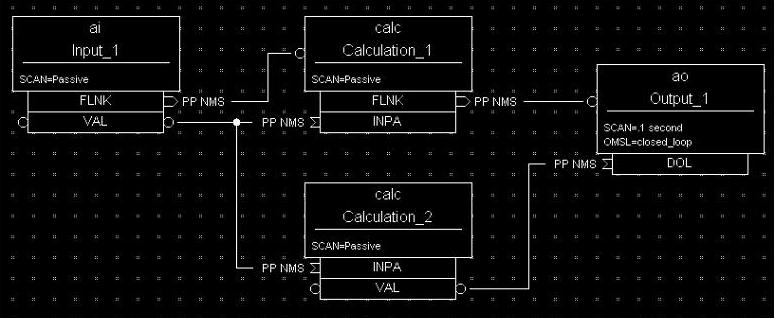

If the record that is referenced by the FLNK field has a SCAN field of Passive, then that record is processed after the record containing the FLNK. The FLNK field only causes record processing; no data is passed. In [[#Figure 1|''Figure 1'']], three records are shown. The ai record "Input_2" is processed periodically. After Input_2 has read the new input, converted it to engineering units, checked the alarm condition, and posted monitors to Channel Access, the calc record "Calculation_2" is processed. Calculation_2 reads the input, performs the calculation, checks the alarm condition, and posts monitors to Channel Access; then the ao record "Output_2" is processed. Output_2 reads the desired output, rate limits it, clamps the range, calls the device support for the OUT field, checks alarms, posts monitors, and then is complete. | |||

<H5 ID="Figure_1">Figure 1</H5> | <H5 ID="Figure_1">Figure 1</H5> | ||

[[Image: | [[Image:RecordProcessingFLNK.jpg|Figure 1]] | ||

==== Input Links ==== | |||

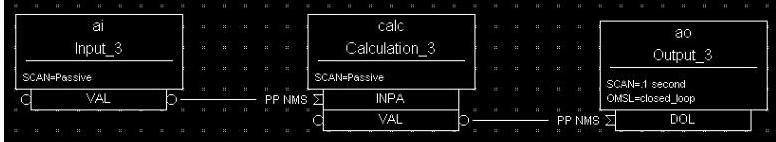

Input links normally fetch data from one field into a field in the referring record. For instance, if the INPA field of a CALC record is set to Input_3.VAL, then the VAL field is fetched from the Input_3 record and placed in the A field of the CALC record. These data links have an attribute that specifies whether a passive record should be processed before the value is returned. The default for this attribute is NPP (no process passive). In this case, the VAL field is simply read and returned. If the attribute is set to PP (process passive), then the record is processed before the field is returned. In [[#Figure 2|''Figure 2'']], the PP attribute is used. In this example, Output_3 is processed periodically. Record processing first fetches the DOL field. As the DOL field has the PP attribute set, the record Calc_3 is processed before its VAL field is returned. The first thing the ai record Input_3 does is read the input. It then converts the RVAL field to engineering units and places this in the VAL field, checks alarms, posts monitors, and then returns. The calc record then fetches the VAL field from Input_3, places it in the A field, computes the calculation, checks alarms, posts monitors, and then returns. The ao record, Output_3, then fetches the VAL field from the calc record, applies rate of change and limits, writes the new value, checks alarms, posts monitors, and completes. | |||

<H5 ID="Figure_2">Figure 2</H5> | |||

[[Image:RecordProcessing1PP.jpg|Figure 2. Process Passive Link Attribute]] | |||

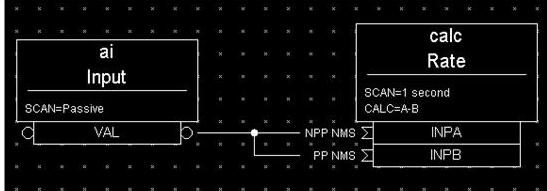

In [[#Figure 3|''Figure 3'']], the PP/NPP attribute is used to calculate a rate of change. At 1 Hz, the calculation record is processed. It fetches the inputs for the calc record in order. As INPA has an attribute of NPP, the VAL field is taken from the ai record without processing it. Before INPB takes the VAL field from the ai record, the ai record is processed, as the attribute on this link is PP. The new ai value is placed in the B field of the calc record. A-B is therefore the difference between the VAL field of the ai record one second ago and its current VAL field. | |||

<H5 ID="Figure_3">Figure 3</H5> | |||

[[Image:RecordProcessingPPExample.jpg|Figure 3]] | |||
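The rate-of-change arrangement can be modelled in a few lines of Python. This is only a sketch of the fetch order described above: the class and function names are hypothetical, and the ai record is reduced to something that takes its next reading when processed.

```python
class AiRecord:
    """Stand-in for a passive ai record: each process() call reads a new value."""

    def __init__(self, readings):
        self._readings = iter(readings)
        self.val = 0.0

    def process(self):
        self.val = next(self._readings)


def process_calc(ai: AiRecord) -> float:
    """One processing pass of the calc record, fetching INPA and then INPB."""
    a = ai.val      # INPA is NPP: take VAL as it stands (left from the last scan)
    ai.process()    # INPB is PP: process the passive ai record first ...
    b = ai.val      # ... then take its new VAL
    return a - b    # the calculation A-B


ai = AiRecord([10.0, 12.5, 11.0])
ai.process()                    # prime the record so VAL holds a first reading
first = process_calc(ai)        # 10.0 - 12.5
second = process_calc(ai)       # 12.5 - 11.0
```

The result depends on the inputs being fetched in order: if INPB were fetched before INPA, both fields would hold the same new value.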

==== Process Chains ==== | |||

Links can be used to create complex scanning logic. In the forward link example above, the chain of records is determined by the scan rate of the input record. In the PP example, the scan rate of the chain is determined by the rate of the output. Either of these may be appropriate depending on the hardware and process limitations. | |||

Care must be taken as this flexibility can also lead to some incorrect configurations. In these next examples we look at some mistakes that can occur. | |||

<H5 ID=" | In [[#Figure 4|''Figure 4'']], two records that are scanned at 10 Hz make references to the same Passive record. In this case, no alarm or error is generated. The Passive record is processed twice in every 10 Hz cycle. The time between the two scans depends on what records are processed between the two periodic records. | ||

<H5 ID="Figure_4">Figure 4</H5> | |||

[[Image:ScanTwice.jpg|Figure 4]] | |||

[[ | In [[#Figure 5|''Figure 5'']], several circular references are made. As the record processing is recursively called for links, the record containing the link is marked as active during the entire time that the chain is being processed. When one of these circular references is encountered, the active flag is recognized and the request to process the record is ignored. | ||

<H5 ID="Figure_5">Figure 5</H5> | |||

[[Image:PACT.jpg|Figure 5]] | |||
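The effect of the active flag on a circular chain can be sketched as follows. This is a simplified Python model, not the IOC code: the `Record` class, its `active` attribute, and the single forward link per record are assumptions made for illustration.

```python
class Record:
    """Minimal record with one forward link and an 'active' flag."""

    def __init__(self, name):
        self.name = name
        self.flnk = None        # next record in the chain, if any
        self.active = False     # set while this record's chain is being processed
        self.count = 0          # number of times the record actually processed

    def process(self) -> bool:
        if self.active:
            return False        # circular reference: the request is ignored
        self.active = True
        try:
            self.count += 1
            if self.flnk is not None:
                self.flnk.process()   # recursive call for the link
        finally:
            self.active = False
        return True


# A -> B -> C -> A forms a circular reference.
a, b, c = Record("A"), Record("B"), Record("C")
a.flnk, b.flnk, c.flnk = b, c, a
a.process()
counts = [r.count for r in (a, b, c)]
```

Each record is processed once per pass; the request that C sends back to A is dropped because A is still marked active.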

=== Channel Access Links === | |||

A Channel Access link is an input link or output link that specifies a link to a record located in another IOC, or an input or output link with one of the following attributes: CA, CP, or CPP. | |||

==== Channel Access Input Links ==== | |||

If the input link specifies CA, CP, or CPP, regardless of the location of the process variable being referenced, it will be forced to be a Channel Access link. This is helpful for separating process chains that are not tightly related. If the input link specifies CP, it also causes the record containing the input link to process whenever a monitor is posted, no matter what the record's SCAN field specifies. If the input link specifies CPP, it causes the record to be processed if and only if the record with the CPP link has a SCAN field set to Passive. In other words, CP and CPP cause the record containing the link to be processed when the process variable that they reference changes. | |||
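The rule for when a posted monitor triggers processing can be written as a small decision function. The function name and the use of plain strings for the link attribute and the SCAN field are assumptions of this sketch.

```python
def processes_on_monitor(attribute: str, scan: str) -> bool:
    """Decide whether a monitor on the linked variable processes the record.

    attribute: the Channel Access attribute on the input link ("CA", "CP", "CPP")
    scan:      the SCAN field of the record containing the link
    """
    if attribute == "CP":
        return True                  # always, whatever SCAN specifies
    if attribute == "CPP":
        return scan == "Passive"     # only if the record is Passive
    return False                     # plain CA: no processing on monitors


cases = {
    ("CA", "Passive"): processes_on_monitor("CA", "Passive"),
    ("CP", "1 second"): processes_on_monitor("CP", "1 second"),
    ("CPP", "Passive"): processes_on_monitor("CPP", "Passive"),
    ("CPP", "1 second"): processes_on_monitor("CPP", "1 second"),
}
```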

[[ | ==== Channel Access Output Links ==== | ||

Only CA is appropriate for an output link. The write to a field over channel access causes processing as specified in [[#Channel Access Puts to Passive Scanned Records|''Channel Access Puts to Passive Scanned Records'']]. | |||

=== | ==== Channel Access Forward Links ==== | ||

Forward links can also be Channel Access links, either when they specify a record located in another IOC or when they specify the CA attributes. However, forward links will only be made Channel Access links if they specify the PROC field of another record. | |||

=== Maximize Severity Attribute === | |||

The Maximize Severity attribute is one of NMS (Non-Maximize Severity), MS (Maximize Severity), MSS (Maximize Status and Severity) or MSI (Maximize Severity if Invalid). It determines whether alarm severity is propagated across links. If the attribute is MSI only a severity of INVALID_ALARM is propagated; settings of MS or MSS propagate all alarms that are more severe than the record's current severity. For input links the alarm severity of the record referred to by the link is propagated to the record containing the link. For output links the alarm severity of the record containing the link is propagated to the record referred to by the link. If the severity is changed the associated alarm status is set to LINK_ALARM, except if the attribute is MSS when the alarm status will be copied along with the severity. | |||

The method of determining if the alarm status and severity should be changed is called "maximize severity". In addition to its actual status and severity, each record also has a new status and severity. The new status and severity are initially 0, which means NO_ALARM. Every time a software component wants to modify the status and severity, it first checks the new severity and only makes a change if the severity it wants to set is greater than the current new severity. If it does make a change, it changes the new status and new severity, not the current status and severity. When database monitors are checked, which is normally done by a record processing routine, the current status and severity are set equal to the new values and the new values reset to zero. The end result is that the current alarm status and severity reflect the highest severity outstanding alarm. If multiple alarms of the same severity are present the alarm status reflects the first one detected. | |||
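The bookkeeping described above can be sketched in Python. The class, its attribute names, the numeric severity values other than 0 for NO_ALARM, and the status strings are all illustrative assumptions, not the record structure itself.

```python
NO_ALARM, MINOR, MAJOR, INVALID = 0, 1, 2, 3   # illustrative severity ordering


class AlarmState:
    """Current and pending ("new") alarm status and severity of one record."""

    def __init__(self):
        self.status, self.severity = "NO_ALARM", NO_ALARM
        self.new_status, self.new_severity = "NO_ALARM", NO_ALARM

    def raise_alarm(self, status: str, severity: int) -> None:
        # Only a strictly greater severity replaces the pending alarm, so the
        # first alarm detected at a given severity keeps its status.
        if severity > self.new_severity:
            self.new_status, self.new_severity = status, severity

    def check_monitors(self) -> None:
        # Copy the pending values to the current ones, then reset the pending values.
        self.status, self.severity = self.new_status, self.new_severity
        self.new_status, self.new_severity = "NO_ALARM", NO_ALARM


state = AlarmState()
state.raise_alarm("HIGH", MINOR)
state.raise_alarm("LINK", MAJOR)
state.raise_alarm("HIHI", MAJOR)    # same severity as the pending alarm: ignored
state.check_monitors()
```

After `check_monitors()` the record reports the LINK status with MAJOR severity: it was the first alarm raised at the highest severity.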

== Phase == | == Phase == | ||

| Line 142: | Line 164: | ||

It is important to understand that in the above example, no record causes another to be processed. The phase mechanism instead causes each to process in sequence. | It is important to understand that in the above example, no record causes another to be processed. The phase mechanism instead causes each to process in sequence. | ||

| Line 169: | Line 177: | ||

== Hardware Addresses == | == Hardware Addresses == | ||

The interface between EPICS process database logic and hardware drivers is indicated in two fields of records that support hardware interfaces: DTYP and INP/OUT. The DTYP field is the name of the device support entry table that is used to interface to the device. The address specification is dictated by the device support. Some conventions exist for several buses that are listed below. Lately, more devices have just opted to use a string that is then parsed by the device support as desired. This specification type is called INST I/O. The other conventions listed here include: VME, Allen-Bradley, CAMAC, GPIB, BITBUS, VXI, and RF. The input specification for each of these is different. The specification of these strings must be acquired from the device support code or document. | |||

=== INST === | |||

The INST I/O specification is a string that is parsed by the device support. The format of this string is determined by the device support. | |||

; <code>#@''parm''</code> | |||

: For INST I/O | |||

:* @ precedes optional string ''parm'' | |||

=== VME Bus === | === VME Bus === | ||

The VME address specification format differs between the various devices. In all of these specifications the '#' character designates a hardware address. The three formats are: | The VME address specification format differs between the various devices. In all of these specifications the '#' character designates a hardware address. The three formats are: | ||

; <code>#C''x'' S''y'' @''parm''</code> | ; <code>#C''x'' S''y'' @''parm''</code> | ||

: For analog in, analog out, and timer | : For analog in, analog out, and timer | ||

| Line 187: | Line 198: | ||

The Allen-Bradley address specification is a bit more complicated as it has several more fields. The '#' designates a hardware address. The format is: | The Allen-Bradley address specification is a bit more complicated as it has several more fields. The '#' designates a hardware address. The format is: | ||

; <code>#L''a'' A''b'' C''c'' S''d'' @''parm''</code> | ; <code>#L''a'' A''b'' C''c'' S''d'' @''parm''</code> | ||

: All record types | : All record types | ||

| Line 201: | Line 211: | ||

The CAMAC address specification is similar to the Allen-Bradley address specification. The '#' signifies a hardware address. The format is: | The CAMAC address specification is similar to the Allen-Bradley address specification. The '#' signifies a hardware address. The format is: | ||

; <code>#B''a'' C''b'' N''c'' A''d'' F''e'' @''parm''</code> | ; <code>#B''a'' C''b'' N''c'' A''d'' F''e'' @''parm''</code> | ||

: For waveform digitizers | : For waveform digitizers | ||

| Line 215: | Line 224: | ||

=== Others === | === Others === | ||

The GPIB | The GPIB, BITBUS, RF, and VXI card-types have been added to the supported I/O cards. A brief description of the address format for each follows. For a further explanation, see the specific documentation on each card. | ||

; <code>#L''a'' A''b'' @''parm''</code> | ; <code>#L''a'' A''b'' @''parm''</code> | ||

| Line 229: | Line 238: | ||

:* P precedes the port on node ''c'' | :* P precedes the port on node ''c'' | ||

:* S precedes the signal on port ''d'' | :* S precedes the signal on port ''d'' | ||

:* @ precedes optional string ''parm'' | :* @ precedes optional string ''parm'' | ||

| Line 251: | Line 256: | ||

Database addresses are used to specify input links, desired output links, output links, and forward processing links. The format in each case is the same: | Database addresses are used to specify input links, desired output links, output links, and forward processing links. The format in each case is the same: | ||

<RecordName>.<FieldName> | <RecordName>.<FieldName> | ||

where <code>RecordName</code> is simply the name of the record being referenced, '.' is the separator between the record name and the field name, and <code>FieldName</code> is the name of the field within the record. | |||

The record name and field name specification are case sensitive. The record name can be a mix of the following: a-z A-Z 0-9 _ - : . [ ] < > ;. The field name is always upper case. If no field name is specified as part of an address, the value field (VAL) of the record is assumed. Forward processing links do not need to include the field name because no value is returned when a forward processing link is used; therefore, a forward processing link need only specify a record name. | |||

The record name and field name specification are case sensitive. The record name | |||

Basic typecast conversions are made automatically when a value is retrieved from another record--integers are converted to floating point numbers and floating point numbers are converted to integers. For example, a calculation record which uses the value field of a binary input will get a floating point 1 or 0 to use in the calculation, because a calculation record's value fields are floating point numbers. If the value of the calculation record is used as the desired output of a multi-bit binary output, the floating point result is converted to an integer, because multi-bit binary outputs use integers. | Basic typecast conversions are made automatically when a value is retrieved from another record--integers are converted to floating point numbers and floating point numbers are converted to integers. For example, a calculation record which uses the value field of a binary input will get a floating point 1 or 0 to use in the calculation, because a calculation record's value fields are floating point numbers. If the value of the calculation record is used as the desired output of a multi-bit binary output, the floating point result is converted to an integer, because multi-bit binary outputs use integers. | ||

Records that use soft device support routines or have no hardware device support routines are called ''soft records''. See the chapter on each record for information about that record's device support. | Records that use soft device support routines or have no hardware device support routines are called ''soft records''. See the chapter on each record for information about that record's device support. | ||

== Constants == | == Constants == | ||

| Line 304: | Line 284: | ||

The most simple type of discrete conversion would be the case of a discrete input that indicates the on/off state of a device. If the level is high it indicates that the state of the device is on. Conversely, if the level is low it indicates that the device is off. In the database, parameters are available to enter strings which correspond to each level, which, in turn, correspond to a state (0,1). By defining these strings, the operator is not required to know that a specific transducer is on when the level of its transmitter is high or off when the level is low. In a typical example, the conversion parameters for a discrete input would be entered as follows: | The most simple type of discrete conversion would be the case of a discrete input that indicates the on/off state of a device. If the level is high it indicates that the state of the device is on. Conversely, if the level is low it indicates that the device is off. In the database, parameters are available to enter strings which correspond to each level, which, in turn, correspond to a state (0,1). By defining these strings, the operator is not required to know that a specific transducer is on when the level of its transmitter is high or off when the level is low. In a typical example, the conversion parameters for a discrete input would be entered as follows: | ||

; '''Zero Name (ZNAM):''' Off | |||

; '''Zero Name (ZNAM): ''' | ; '''One Name (ONAM):''' On | ||

; '''One Name (ONAM):''' | |||

The equivalent discrete output example would be an on/off controller. Let's consider a case where the safe state of a device is <code>On</code>, the zero state. The level being low drives the device on, so that a broken cable will drive the device to a safe state. In this example the database parameters are entered as follows: | The equivalent discrete output example would be an on/off controller. Let's consider a case where the safe state of a device is <code>On</code>, the zero state. The level being low drives the device on, so that a broken cable will drive the device to a safe state. In this example the database parameters are entered as follows: | ||

; '''Zero Name (ZNAM):''' On | |||

; '''Zero Name (ZNAM): ''' | ; '''One Name (ONAM):''' Off | ||

; '''One Name (ONAM): ''' | |||

By giving the outside world the device state, the information is clear. Binary inputs and binary outputs are used to represent such on/off devices. | By giving the outside world the device state, the information is clear. Binary inputs and binary outputs are used to represent such on/off devices. | ||

A more complex example involving discrete values is | A more complex example involving discrete values is a multi-bit binary input record. Consider a valve whose two monitor bits encode four states: Traveling, Full Open, Full Closed, and Disconnected. The bit pattern for each state is entered into the database with the string that describes that state. The database parameters for the monitor would be entered as follows: | ||

; '''Number of Bits (NOBT): ''' 2 | |||

; '''Number of Bits (NOBT): ''' | ; '''First Input Bit Spec (INP): ''' Address of the least significant bit | ||

; '''Zero Value (ZRVL):''' 0 | |||

; '''First Input Bit Spec (INP): ''' | ; '''One Value (ONVL):''' 1 | ||

; '''Two Value (TWVL):''' 2 | |||

; '''Zero Value (ZRVL):''' | ; '''Three Value (THVL):''' 3 | ||

; '''Zero String (ZRST):''' Traveling | |||

; '''One Value (ONVL):''' | ; '''One String (ONST):''' Open | ||

; '''Two String (TWST):''' Closed | |||

; '''Two Value (TWVL):''' | ; '''Three String (THST):''' Disconnected | ||

; '''Three Value (THVL):''' | |||

; '''Zero String (ZRST):''' | |||

; '''One String (ONST):''' | |||

; '''Two String (TWST):''' | |||

; '''Three String (THST):''' | |||

In this case, when the database record is scanned, the monitor bits are read and compared with the bit patterns for each state. When the bit pattern is found, the device is set to that state. For instance, if the | In this case, when the database record is scanned, the monitor bits are read and compared with the bit patterns for each state. When the bit pattern is found, the device is set to that state. For instance, if the two monitor bits read equal <code>10</code> (2), the Two value is the corresponding value, and the device would be set to state 2 which indicates that the valve is Closed. | ||

If the bit pattern is not found, the device is in an unknown state. | If the bit pattern is not found, the device is in an unknown state. In this example all possible states are defined. | ||
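The state lookup for the valve example can be sketched in Python. The table mirrors the values and strings listed above; the function name, the masking of the raw value to the configured number of bits, and returning `None` for an unknown pattern are assumptions of this sketch.

```python
# (state value, state string) pairs in state order: Zero, One, Two, Three.
STATES = [
    (0, "Traveling"),
    (1, "Open"),
    (2, "Closed"),
    (3, "Disconnected"),
]


def lookup_state(raw_bits: int, nobt: int = 2):
    """Return (state index, state string) for the bits read, or None if unknown."""
    masked = raw_bits & ((1 << nobt) - 1)    # keep only the NOBT lowest bits
    for index, (value, label) in enumerate(STATES):
        if value == masked:
            return index, label
    return None                              # bit pattern not found: unknown state


closed = lookup_state(0b10)    # the two monitor bits read 10 (2)
```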

In addition, the DOL fields of binary output records (bo and mbbo) will accept values in strings. When they retrieve the string or when the value field is given a string via <code>put_enum_strs</code>, a match is sought with one of the states. If a match is found, the value for that state is written. | In addition, the DOL fields of binary output records (bo and mbbo) will accept values in strings. When they retrieve the string or when the value field is given a string via <code>put_enum_strs</code>, a match is sought with one of the states. If a match is found, the value for that state is written. | ||

| Line 351: | Line 314: | ||

Analog conversions require knowledge of the transducer, the filters, and the I/O cards. Together they measure the process, transmit the data, and interface the data to the IOC. Smoothing is available to filter noisy signals. The smoothing argument is a constant between 0 and 1 and is specified in the SMOO field. It is applied to the converted hardware signal as follows: | Analog conversions require knowledge of the transducer, the filters, and the I/O cards. Together they measure the process, transmit the data, and interface the data to the IOC. Smoothing is available to filter noisy signals. The smoothing argument is a constant between 0 and 1 and is specified in the SMOO field. It is applied to the converted hardware signal as follows: | ||

eng units = (new eng units × (1 - smoothing)) + (old eng units × smoothing) | eng units = (new eng units × (1 - smoothing)) + (old eng units × smoothing) | ||
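As a worked instance of the smoothing formula, the following Python sketch applies it to a short series of readings. The function name and the sample values are illustrative only.

```python
def smooth(new_eng: float, old_eng: float, smoothing: float) -> float:
    """Apply the smoothing formula; smoothing is the SMOO constant in [0, 1]."""
    if not 0.0 <= smoothing <= 1.0:
        raise ValueError("smoothing must be between 0 and 1")
    return new_eng * (1.0 - smoothing) + old_eng * smoothing


# smoothing = 0 passes the new value through, smoothing = 1 never updates.
unfiltered = smooth(100.0, 50.0, 0.0)
frozen = smooth(100.0, 50.0, 1.0)
halfway = smooth(100.0, 50.0, 0.5)

# Filtering a step from 0 to 8 with smoothing = 0.5 approaches 8 gradually.
value = 0.0
trace = []
for reading in [8.0, 8.0, 8.0]:
    value = smooth(reading, value, 0.5)
    trace.append(value)
```

A larger smoothing constant gives more weight to the previous value, so noise is suppressed more strongly at the cost of a slower response to real changes.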

| Line 357: | Line 319: | ||

Whether an analog record performs linear conversions, breakpoint conversions, or no conversions at all depends on how the record's LINR field is configured. The possible choices for the LINR field are as follows: | Whether an analog record performs linear conversions, breakpoint conversions, or no conversions at all depends on how the record's LINR field is configured. The possible choices for the LINR field are as follows: | ||

* LINEAR | * LINEAR | ||

* NO CONVERSION | * NO CONVERSION | ||

| Line 368: | Line 329: | ||

=== Linear Conversions === | === Linear Conversions === | ||

The engineering units full scale and low scale are specified in the EGUF and EGUL fields, respectively. The values of the EGUF and EGUL fields correspond to the maximum and minimum values of the transducer, respectively. Thus, the value of these fields is device dependent. For example, if the transducer has a range of -10 to +10 volts, then the EGUF field should be 10 and the EGUL field should be -10. In all cases, the EGU field is a string that contains the text to indicate the units of the value. | |||

There are three formulas to know when considering the linear conversion parameters. The conversion from measured value to engineering units is as follows: | There are three formulas to know when considering the linear conversion parameters. The conversion from measured value to engineering units is as follows: | ||

<TABLE> | <TABLE> | ||

<TD ROWSPAN=3>eng units = eng units low + ( | <TD ROWSPAN=3>eng units = eng units low + ( | ||

| Line 381: | Line 342: | ||

</TABLE> | </TABLE> | ||

In the following examples the determination of engineering units full scale and low scale is shown. The conversion to engineering units is also shown to familiarize the reader with the signal conversions from signal source to database engineering units. | In the following examples the determination of engineering units full scale and low scale is shown. The conversion to engineering units is also shown to familiarize the reader with the signal conversions from signal source to database engineering units. | ||
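As a rough numerical illustration of the conversion from measured value to engineering units, the sketch below assumes the usual linear form, in which the raw reading is scaled by the full-scale count of the converter and mapped onto the span between EGUL and EGUF. The function name, the 12 bit converter, and the exact form of the scaling are assumptions of this sketch; the device support for a particular card defines the real behaviour.

```python
def raw_to_eng(raw: int, eguf: float, egul: float, bits: int = 12) -> float:
    """Map a raw converter count onto the range EGUL..EGUF (assumed linear form)."""
    full_scale_counts = (1 << bits) - 1      # 4095 for a 12 bit converter
    return egul + (raw / full_scale_counts) * (eguf - egul)


# A transducer range of -10 to +10 volts: EGUF = 10, EGUL = -10.
lowest = raw_to_eng(0, eguf=10.0, egul=-10.0)
highest = raw_to_eng(4095, eguf=10.0, egul=-10.0)
middle = raw_to_eng(2048, eguf=10.0, egul=-10.0)
```

The lowest count maps to EGUL and the highest count to EGUF; every other count falls proportionally in between.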

==== Transducer Matches the I/O module ==== | ==== Transducer Matches the I/O module ==== | ||

First let us consider a linear conversion. In this example, the transducer transmits 0-10 Volts, there is no amplification, and the I/O card uses a 0-10 Volt interface. | First let us consider a linear conversion. In this example, the transducer transmits 0-10 Volts, there is no amplification, and the I/O card uses a 0-10 Volt interface. | ||

[[Image:RRM 3-13 Concepts d1.gif]] | [[Image:RRM 3-13 Concepts d1.gif]] | ||

The transducer transmits pressure: 0 PSI at 0 Volts and 175 PSI at 10 Volts. The engineering units full scale and low scale are determined as follows: | The transducer transmits pressure: 0 PSI at 0 Volts and 175 PSI at 10 Volts. The engineering units full scale and low scale are determined as follows: | ||

eng. units full scale = 17.5 × 10.0 | eng. units full scale = 17.5 × 10.0 | ||

eng. units low scale = 17.5 × 0.0 | eng. units low scale = 17.5 × 0.0 | ||

The field entries in an analog input record to convert this pressure will be as follows: | The field entries in an analog input record to convert this pressure will be as follows: | ||

; '''LINR:''' Linear | |||

; '''LINR:''' | ; '''EGUF:''' 175.0 | ||

; '''EGUL:''' 0 | |||

; '''EGUF:''' | ; '''EGU:''' PSI | ||

; '''EGUL:''' | |||

; '''EGU:''' | |||

The conversion will also take into account the precision of the I/O module. In this example (assuming a 12 bit analog input card) the conversion is as follows: | The conversion will also take into account the precision of the I/O module. In this example (assuming a 12 bit analog input card) the conversion is as follows: | ||

<TABLE> | <TABLE> | ||

<TD ROWSPAN=3>eng units = 0 + ( | <TD ROWSPAN=3>eng units = 0 + ( | ||

| Line 428: | Line 376: | ||

Let's consider a variation of this linear conversion where the transducer is 0-5 Volts. | Let's consider a variation of this linear conversion where the transducer is 0-5 Volts. | ||

[[Image:RRM 3-13 Concepts d2.gif]] | [[Image:RRM 3-13 Concepts d2.gif]] | ||

In this example the transducer is producing 0 Volts at 0 PSI and 5 Volts at 175 PSI. The engineering units full scale and low scale are determined as follows: | In this example the transducer is producing 0 Volts at 0 PSI and 5 Volts at 175 PSI. The engineering units full scale and low scale are determined as follows: | ||

eng. units full scale = 35 × 10 | eng. units full scale = 35 × 10 | ||

eng. units low scale = 35 × 0 | eng. units low scale = 35 × 0 | ||

The field entries in an analog record to convert this pressure will be as follows: | The field entries in an analog record to convert this pressure will be as follows: | ||

; '''LINR:''' Linear | |||

; '''LINR:''' | ; '''EGUF:''' 350 | ||

; '''EGUL:''' 0 | |||

; '''EGUF:''' | ; '''EGU:''' PSI | ||

; '''EGUL:''' | |||

; '''EGU:''' | |||

The conversion will also take into account the precision of the I/O module. In this example (assuming a 12 bit analog input card) the conversion is as follows: | The conversion will also take into account the precision of the I/O module. In this example (assuming a 12 bit analog input card) the conversion is as follows: | ||

<TABLE> | <TABLE> | ||

<TD ROWSPAN=3>eng units = 0 + ( | <TD ROWSPAN=3>eng units = 0 + ( | ||

| Line 460: | Line 400: | ||

Notice that at full scale the transducer will generate 5 Volts to represent 175 PSI. This is only half of what the input card accepts; input is 2048. Let's plug in the numbers to see the result: | Notice that at full scale the transducer will generate 5 Volts to represent 175 PSI. This is only half of what the input card accepts; input is 2048. Let's plug in the numbers to see the result: | ||

0 + (2048 / 4095) * (350 - 0) = 175 | 0 + (2048 / 4095) * (350 - 0) = 175 | ||

| Line 468: | Line 407: | ||

Let's consider another variation of this linear conversion where the input card accepts -10 Volts to 10 Volts (i.e. Bipolar instead of Unipolar). | Let's consider another variation of this linear conversion where the input card accepts -10 Volts to 10 Volts (i.e. Bipolar instead of Unipolar). | ||

[[Image:RRM 3-13 Concepts d3.gif]] | [[Image:RRM 3-13 Concepts d3.gif]] | ||

In this example the transducer is producing 0 Volts at 0 PSI and 10 Volts at 175 PSI. The input module has a different range of voltages and the engineering units full scale and low scale are determined as follows: | In this example the transducer is producing 0 Volts at 0 PSI and 10 Volts at 175 PSI. The input module has a different range of voltages and the engineering units full scale and low scale are determined as follows: | ||

eng. units full scale = 17.5 × 10 | eng. units full scale = 17.5 × 10 | ||

eng. units low scale = 17.5 × (-10) | eng. units low scale = 17.5 × (-10) | ||

The database entries to convert this pressure will be as follows: | The database entries to convert this pressure will be as follows: | ||

; '''LINR:''' Linear | |||

; '''LINR: ''' | ; '''EGUF:''' 175 | ||

; '''EGUL:''' -175 | |||

; '''EGUF:''' | ; '''EGU:''' PSI | ||

; '''EGUL:''' | |||

; '''EGU:''' | |||

The conversion will also take into account the precision of the I/O module. In this example (assuming a 12 bit analog input card) the conversion is as follows: | The conversion will also take into account the precision of the I/O module. In this example (assuming a 12 bit analog input card) the conversion is as follows: | ||

<TABLE> | <TABLE> | ||

<TD ROWSPAN=3>eng units = -175 + ( | <TD ROWSPAN=3>eng units = -175 + ( | ||

| Line 500: | Line 431: | ||

Notice that at low scale the transducer will generate 0 Volts to represent 0 PSI. Because this is half of what the input card accepts, it is input as 2048. Let's plug in the numbers to see the result: | Notice that at low scale the transducer will generate 0 Volts to represent 0 PSI. Because this is half of what the input card accepts, it is input as 2048. Let's plug in the numbers to see the result: | ||

-175 + (2048 / 4095) * (175 - -175) = 0 | -175 + (2048 / 4095) * (175 - -175) = 0 | ||

| Line 508: | Line 438: | ||

Let's consider another variation of this linear conversion where the input card accepts -10 Volts to 10 Volts, the transducer transmits 0 - 2 Volts for 0 - 175 PSI and a 2x amplifier is on the transmitter. | Let's consider another variation of this linear conversion where the input card accepts -10 Volts to 10 Volts, the transducer transmits 0 - 2 Volts for 0 - 175 PSI and a 2x amplifier is on the transmitter. | ||

[[Image:RRM 3-13 Concepts d4.gif]] | [[Image:RRM 3-13 Concepts d4.gif]] | ||

At 0 PSI the transducer transmits 0 Volts, which is amplified to 0 Volts. Because 0 Volts is half scale for the bipolar input card, it is read as 2048. At 175 PSI, full scale, the transducer transmits 2 Volts, which is amplified to 4 Volts. The analog input card sees 4 Volts as 70 percent of range, or 2866 counts. The engineering units full scale and low scale are determined as follows: | At 0 PSI the transducer transmits 0 Volts, which is amplified to 0 Volts. Because 0 Volts is half scale for the bipolar input card, it is read as 2048. At 175 PSI, full scale, the transducer transmits 2 Volts, which is amplified to 4 Volts. The analog input card sees 4 Volts as 70 percent of range, or 2866 counts. The engineering units full scale and low scale are determined as follows: | ||

eng units full scale = 43.75 × 10 | eng units full scale = 43.75 × 10 | ||

eng units low scale = 43.75 × (-10) | eng units low scale = 43.75 × (-10) | ||

(175 / 4 = 43.75) The record's field entries to convert this pressure will be as follows: | (175 / 4 = 43.75) The record's field entries to convert this pressure will be as follows: | ||

; '''LINR''' Linear | |||

; '''LINR | ; '''EGUF''' 437.5 | ||

; '''EGUL''' -437.5 | |||

; '''EGUF | ; '''EGU''' PSI | ||

; '''EGUL | |||

; '''EGU | |||

The conversion will also take into account the precision of the I/O module. In this example (assuming a 12 bit analog input card) the conversion is as follows: | The conversion will also take into account the precision of the I/O module. In this example (assuming a 12 bit analog input card) the conversion is as follows: | ||

<TABLE> | <TABLE> | ||

<TD ROWSPAN=3>eng units = -437.5 + ( | <TD ROWSPAN=3>eng units = -437.5 + ( | ||

| Line 541: | Line 463: | ||

Notice that at low scale the transducer will generate 0 Volts to represent 0 PSI. Because this is half of what the input card accepts, it is input as 2048. Let's plug in the numbers to see the result: | Notice that at low scale the transducer will generate 0 Volts to represent 0 PSI. Because this is half of what the input card accepts, it is input as 2048. Let's plug in the numbers to see the result: | ||

-437.5 + (2048 / 4095) * (437.5 - -437.5) = 0 | -437.5 + (2048 / 4095) * (437.5 - -437.5) = 0 | ||

Notice that at full scale the transducer will generate 2 volts which represents 175 PSI. The amplifier will change the 2 Volts to 4 Volts. 4 Volts is 14/20 or 70 percent of the I/O card's scale. The input from the I/O card is therefore 2866 (i.e., 0.7 * 4095). Let's plug in the numbers to see the result: | Notice that at full scale the transducer will generate 2 volts which represents 175 PSI. The amplifier will change the 2 Volts to 4 Volts. 4 Volts is 14/20 or 70 percent of the I/O card's scale. The input from the I/O card is therefore 2866 (i.e., 0.7 * 4095). Let's plug in the numbers to see the result: | ||

-437.5 + (2866 / 4095) * (437.5 - -437.5) = 175 PSI | -437.5 + (2866 / 4095) * (437.5 - -437.5) = 175 PSI | ||
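The worked results in the linear examples above can be reproduced with a short sketch. It assumes the form used in those calculations, eng units = EGUL + (raw / 4095) × (EGUF - EGUL), for a 12 bit card; the function name <code>raw_to_eng</code> and its parameter names are illustrative and are not part of EPICS.

```python
def raw_to_eng(raw, egul, eguf, full_scale_counts=4095):
    """Linear conversion from raw ADC counts to engineering units.

    Mirrors the worked examples: EGUL + (raw / counts) * (EGUF - EGUL).
    full_scale_counts=4095 assumes a 12 bit analog input card.
    """
    return egul + (raw / full_scale_counts) * (eguf - egul)


if __name__ == "__main__":
    # 0-5 Volt transducer on a 0-10 Volt card: half scale reads about 175 PSI
    print(round(raw_to_eng(2048, 0.0, 350.0), 2))
    # 0-10 Volt transducer on a bipolar card: half scale reads about 0 PSI
    print(round(raw_to_eng(2048, -175.0, 175.0), 2))
    # Amplified 0-2 Volt transducer on a bipolar card: 70 percent of range
    print(round(raw_to_eng(2866, -437.5, 437.5), 2))
```

Because 2048 and 2866 are rounded counts, the results land close to 175, 0, and 175 PSI rather than exactly on them.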

| Line 552: | Line 472: | ||

=== Breakpoint Conversions === | === Breakpoint Conversions === | ||

Now let us consider a non-linear conversion, | Now let us consider a non-linear conversion. These are conversions that could be entered as polynomials. As these are more time consuming to execute, a break point table is created that breaks the non-linear conversion into linear segments that are accurate enough. | ||

==== Breakpoint Table ==== | |||

The breakpoint table is then used to do a piece-wise linear conversion. Each piecewise segment of the breakpoint table contains:<br> | |||

Raw Value Start for this segment, Engineering Units at the start, Slope of this segment. | |||

For a 12 bit ADC a table may look like this: | |||

0x000, 14.0, .2 | |||

0x7ff, 3500.0, .1 | |||

-1. | |||

Any raw value between 000 and 7ff would be set to 14.0 + .2 * raw value. | |||

Any raw value between 7ff and fff would be set to 3500 + .1 * (raw value - 7ff) | |||

==== Breakpoint Conversion Example ==== | |||

When a new raw value is read, the conversion routine starts from the previous line segment, comparing the raw value start, and either going forward or backward in the table to find the proper segment for this new raw value. Once the proper segment is found, the new engineering units value is the engineering units value at the start of this segment plus the slope of this segment times the position on this segment. A table that has an entry for each possible raw count is effectively a look up table. | |||
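The search described above can be sketched as follows. This is an illustration only, not the EPICS conversion routine: the names <code>Segment</code> and <code>BreakpointConverter</code> are hypothetical, and the table values are the two sample segments from the 12 bit ADC table above.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    raw_start: int    # raw value at which this segment starts
    eng_start: float  # engineering units at the start of the segment
    slope: float      # engineering units per raw count


class BreakpointConverter:
    """Piece-wise linear conversion that remembers the last segment used."""

    def __init__(self, segments):
        self.segments = sorted(segments, key=lambda s: s.raw_start)
        self.index = 0  # segment used by the previous conversion

    def convert(self, raw):
        segs = self.segments
        i = self.index
        # Move forward while the raw value lies at or beyond the next segment start.
        while i + 1 < len(segs) and raw >= segs[i + 1].raw_start:
            i += 1
        # Move backward while the raw value lies before the current segment start.
        while i > 0 and raw < segs[i].raw_start:
            i -= 1
        self.index = i
        seg = segs[i]
        # Start value plus slope times the position on this segment.
        return seg.eng_start + seg.slope * (raw - seg.raw_start)


if __name__ == "__main__":
    table = BreakpointConverter([
        Segment(0x000, 14.0, 0.2),
        Segment(0x7FF, 3500.0, 0.1),
    ])
    for raw in (0x000, 0x010, 0x7FF, 0xFFF, 0x005):
        print(hex(raw), table.convert(raw))
```

Starting from the previous segment keeps the search short when successive readings are close together, which is the usual case for a slowly changing signal.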

In this example the transducer is a thermocouple which transmits 0-20 milliAmps. An amplifier is present which amplifies milliAmps to volts. The I/O card uses a 0-10 Volt interface. | |||

[[Image:RRM 3-13 Concepts d5.gif]] | [[Image:RRM 3-13 Concepts d5.gif]] | ||

The transducer is transmitting temperature. The database entries in the analog input record that are needed to convert this temperature will be as follows: | The transducer is transmitting temperature. The database entries in the analog input record that are needed to convert this temperature will be as follows: | ||

; '''LINR''' typeJdegC | |||

; '''EGUF''' 0 | |||

; '''EGUL''' 0 | |||

; '''EGU''' DGC | |||

For analog records that use breakpoint tables, the EGUF and EGUL fields are not used in the conversion, so they do not have to be given values. | |||


There are currently lookup tables for J and K thermocouples in degrees F and degrees C. | There are currently lookup tables for J and K thermocouples in degrees F and degrees C. | ||

Other applications for a lookup table are the remainder of the thermocouples, logarithmic output controllers, and exponential transducers. We have chosen the piece-wise linearization of the signals to perform the conversion, as they provide a mechanism for conversion that minimizes the amount of floating point arithmetic required to convert non-linear signals. Additional breakpoint tables can be added to the existing breakpoints. | Other applications for a lookup table are the remainder of the thermocouples, logarithmic output controllers, and exponential transducers. We have chosen the piece-wise linearization of the signals to perform the conversion, as they provide a mechanism for conversion that minimizes the amount of floating point arithmetic required to convert non-linear signals. Additional breakpoint tables can be added to the existing breakpoints. | ||

==== Creating Breakpoint Tables ==== | |||

There are two ways to create a new breakpoint table: | There are two ways to create a new breakpoint table: | ||

1. Simply type in the data for each segment, giving the raw and corresponding engineering unit value for each point in the following format. | 1. Simply type in the data for each segment, giving the raw and corresponding engineering unit value for each point in the following format. | ||

breaktable(<tablename>) { | breaktable(<tablename>) { | ||

<first point> <first eng units> | <first point> <first eng units> | ||

| Line 588: | Line 518: | ||

2. Create a file consisting of a table of an arbitrary number of values in engineering units and use the utility called '''makeBpt''' to convert the table into a breakpoint table. As an example, the contents of the data file used to create the typeJdegC breakpoint table look like this: | 2. Create a file consisting of a table of an arbitrary number of values in engineering units and use the utility called '''makeBpt''' to convert the table into a breakpoint table. As an example, the contents of the data file used to create the typeJdegC breakpoint table look like this: | ||

!header | !header | ||

"typeJdegC" 0 0 700 4095 .5 -210 760 1 | "typeJdegC" 0 0 700 4095 .5 -210 760 1 | ||

| Line 595: | Line 524: | ||

The file must have the extension <code>.data</code>. The file must first have a header specifying these nine things: | The file must have the extension <code>.data</code>. The file must first have a header specifying these nine things: | ||

# Name of breakpoint table in quotes: '''"typeJdegC"''' | # Name of breakpoint table in quotes: '''"typeJdegC"''' | ||

# Engineering units for 1st breakpoint table entry: '''0''' | # Engineering units for 1st breakpoint table entry: '''0''' | ||

| Line 607: | Line 535: | ||

The rest of the file contains lines of equally spaced engineering values, with each line no more than 160 characters before the new-line character. The header and the actual table should be specified by !header and !data, respectively. The file for this data table is called <code>typeJdegC.data</code>, and can be converted to a breakpoint table with the '''makeBpt''' utility as follows: | The rest of the file contains lines of equally spaced engineering values, with each line no more than 160 characters before the new-line character. The header and the actual table should be specified by !header and !data, respectively. The file for this data table is called <code>typeJdegC.data</code>, and can be converted to a breakpoint table with the '''makeBpt''' utility as follows: | ||

unix% makeBpt typeJdegC.data | unix% makeBpt typeJdegC.data | ||

= | = Alarm Specification = | ||

There are two elements to an alarm condition: the alarm ''status ''and the ''severity'' of that alarm. Each database record contains its current alarm status and the corresponding severity for that status. The scan task which detects these alarms is also capable of generating a message for each change of alarm state. The types of alarms available fall into these categories: scan alarms, read/write alarms, limit alarms, and state alarms. Some of these alarms are configured by the user, and some are automatic which means that they are called by the record support routines on certain conditions, and cannot be changed or configured by the user. | There are two elements to an alarm condition: the alarm ''status ''and the ''severity'' of that alarm. Each database record contains its current alarm status and the corresponding severity for that status. The scan task which detects these alarms is also capable of generating a message for each change of alarm state. The types of alarms available fall into these categories: scan alarms, read/write alarms, limit alarms, and state alarms. Some of these alarms are configured by the user, and some are automatic which means that they are called by the record support routines on certain conditions, and cannot be changed or configured by the user. | ||

| Line 617: | Line 544: | ||

An alarm ''severity'' is used to give weight to the current alarm status. There are four severities: | An alarm ''severity'' is used to give weight to the current alarm status. There are four severities: | ||

* NO_ALARM | * NO_ALARM | ||

* MINOR | * MINOR | ||

| Line 623: | Line 549: | ||

* INVALID | * INVALID | ||

NO_ALARM means no alarm has been triggered. An alarm state that needs attention but is not dangerous is a MINOR alarm. In this instance the alarm state is meant to give a warning to the operator. A serious state is a MAJOR alarm. In this instance the operator should give immediate attention to the situation and take corrective action. An INVALID alarm means there's a problem with the data, which can be any one of several problems; for instance, a | NO_ALARM means no alarm has been triggered. An alarm state that needs attention but is not dangerous is a MINOR alarm. In this instance the alarm state is meant to give a warning to the operator. A serious state is a MAJOR alarm. In this instance the operator should give immediate attention to the situation and take corrective action. An INVALID alarm means there's a problem with the data, which can be any one of several problems; for instance, a bad address specification, device communication failure, or signal is over range. In these cases, an alarm severity of INVALID is set. An INVALID alarm can point to a simple configuration problem or a serious operational problem. | ||

For limit alarms and state alarms, the severity can be configured by the user to be MAJOR or MINOR for a specified state. For instance, an analog record can be configured to trigger a MAJOR alarm when its value exceeds 175.0. In addition to the MAJOR and MINOR | For limit alarms and state alarms, the severity can be configured by the user to be MAJOR or MINOR for a specified state. For instance, an analog record can be configured to trigger a MAJOR alarm when its value exceeds 175.0. In addition to the MAJOR and MINOR severity, the user can choose the NO_ALARM severity, in which case no alarm is generated for that state. | ||

For the other alarm types (i.e., scan, read/write), the severity is always INVALID and not configurable by the user. | For the other alarm types (i.e., scan, read/write), the severity is always INVALID and not configurable by the user. | ||

=== | === Alarm Status === | ||

Alarm status is a field common to all records. The field is defined as an enumerated field. The possible states are listed below. | |||

* NO_ALARM: This record is not in alarm | |||

* READ: An INPUT link failed in the device support | |||

* WRITE: An OUTPUT link failed in the device support | |||

* HIHI: An analog value limit alarm | |||

* HIGH: An analog value limit alarm | |||

* LOLO: An analog value limit alarm | |||

* LOW: An analog value limit alarm | |||

* STATE: A digital value state alarm | |||

* COS: A digital value change of state alarm | |||

* COMM: A device support alarm that indicates the device is not communicating | |||

* TIMEOUT: A device support alarm that indicates the asynchronous device timed out | |||

* HWLIMIT: A device support alarm that indicates a hardware limit alarm | |||

* CALC: A record support alarm for calculation records indicating a bad calculation | |||

* SCAN: An invalid SCAN field is entered | |||

* LINK: Soft device support for a link failed: no record, bad field, invalid conversion, or INVALID alarm severity on the referenced record. | |||

* SOFT | |||

* BAD_SUB | |||

* UDF | |||

* DISABLE | |||

* SIMM | |||

* READ_ACCESS | |||

* WRITE_ACCESS | |||

There are several problems with this field and menu. | |||

* The maximum number of enumerated strings passed through Channel Access is 16, so nothing past SOFT is seen unless the value is requested through Channel Access as a string. | |||

* Only one state can be true at a time so that the root cause of a problem or multiple problems are masked. This is particularly obvious in the interface between the record support and the device support. The hardware could have some combination of problems and there is no way to see this through the interface provided. | |||

* The list is not complete. | |||

In short, the ability to see failures through the STAT field is limited. Most problems in the hardware, configuration, or communication are reduced to a READ or WRITE error and have their severity set to INVALID. When you have an INVALID alarm severity, some investigation is currently needed to determine the fault. Most EPICS drivers provide a report routine that dumps a large set of diagnostic information. This is a good place to start in these cases. | |||

=== Alarm Conditions Configured in the Database === | |||

When you have a valid value, there are fields in the record that allow the user to configure off-normal conditions. For analog values these are limit alarms. For discrete values, these are state alarms. | |||

=== Limit Alarms === | ==== Limit Alarms ==== | ||

For analog records (this includes such records as the stepper motor record), there are configurable alarm limits. There are two limits for the above normal operating range and two limits for the below normal operating range. Each of these limits has an associated alarm severity which is configured in the database. If the record's value drops below the low limit and an alarm severity of MAJOR was specified for that limit, then a MAJOR alarm is triggered. When the severity of a limit is set to NO_ALARM, none will be generated, even if the limit entered has been violated. | For analog records (this includes such records as the stepper motor record), there are configurable alarm limits. There are two limits for the above normal operating range and two limits for the below normal operating range. Each of these limits has an associated alarm severity which is configured in the database. If the record's value drops below the low limit and an alarm severity of MAJOR was specified for that limit, then a MAJOR alarm is triggered. When the severity of a limit is set to NO_ALARM, none will be generated, even if the limit entered has been violated. | ||

| Line 648: | Line 596: | ||

Analog records also contain a hysteresis field, which is also used when determining limit violations. The hysteresis field is the deadband around the alarm limits. The deadband keeps a signal that is hovering at the limit from generating too many alarms. Let's take an example ([[#Figure 8|''Figure 8'']]) where the range is -100 to 100 volts, the high alarm limit is 30 Volts, and the hysteresis is 10 Volts. If the value is normal and approaches the HIGH alarm limit, an alarm is generated when the value reaches 30 Volts. This will only go to normal if the value drops below the limit by more than the hysteresis. For instance, if the value changes from 30 to 28 this record will remain in HIGH alarm. Only when the value drops below 20 will this record return to normal state. | Analog records also contain a hysteresis field, which is also used when determining limit violations. The hysteresis field is the deadband around the alarm limits. The deadband keeps a signal that is hovering at the limit from generating too many alarms. Let's take an example ([[#Figure 8|''Figure 8'']]) where the range is -100 to 100 volts, the high alarm limit is 30 Volts, and the hysteresis is 10 Volts. If the value is normal and approaches the HIGH alarm limit, an alarm is generated when the value reaches 30 Volts. This will only go to normal if the value drops below the limit by more than the hysteresis. For instance, if the value changes from 30 to 28 this record will remain in HIGH alarm. Only when the value drops below 20 will this record return to normal state. | ||

<H5 ID="Figure_8">Figure 8</H5> | <H5 ID="Figure_8">Figure 8</H5> | ||

[[Image:RRM 3-13 Concepts-8.gif|Figure 8]] | [[Image:RRM 3-13 Concepts-8.gif|Figure 8]] | ||
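The hysteresis behaviour for a single high limit can be sketched as below. This is a simplified illustration rather than the record support code: the function <code>high_alarm_active</code> is hypothetical, it handles only one limit, and keeping the alarm active when the value sits exactly at limit minus hysteresis is an assumption.

```python
def high_alarm_active(value, high_limit, hysteresis, was_in_alarm):
    """Return True if the HIGH alarm should be active for this value.

    The alarm is raised when the value reaches the limit. Once raised,
    it clears only after the value has dropped below the limit by more
    than the hysteresis (boundary handling is an assumption).
    """
    if value >= high_limit:
        return True
    if was_in_alarm and value >= high_limit - hysteresis:
        return True
    return False


if __name__ == "__main__":
    in_alarm = False
    # Limit 30 Volts, hysteresis 10 Volts, as in the example above.
    for value in (25, 30, 28, 22, 19, 25):
        in_alarm = high_alarm_active(value, 30, 10, in_alarm)
        print(value, "HIGH" if in_alarm else "NO_ALARM")
```

Note that the final reading of 25 does not raise the alarm again: once the record has returned to normal, the value must reach 30 Volts before a new HIGH alarm is generated.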

=== State Alarms === | ==== State Alarms ==== | ||

For discrete values there are configurable state alarms. In this case a user may configure a certain state to be an alarm condition. Let's consider a cooling fan whose discrete states are high, low, and off. The off state can be configured to be an alarm condition so that whenever the fan is off the record is in a STATE alarm. The severity of this error is configured for each state. In this example, the low state could be a STATE alarm of MINOR severity, and the off state a STATE alarm of MAJOR severity. | For discrete values there are configurable state alarms. In this case a user may configure a certain state to be an alarm condition. Let's consider a cooling fan whose discrete states are high, low, and off. The off state can be configured to be an alarm condition so that whenever the fan is off the record is in a STATE alarm. The severity of this error is configured for each state. In this example, the low state could be a STATE alarm of MINOR severity, and the off state a STATE alarm of MAJOR severity. | ||

| Line 668: | Line 615: | ||

= Monitor Specification = | = Monitor Specification = | ||

Channel Access Clients connect to channels to either put, get, or monitor. There are fields in the EPICS records that help limit the monitors posted to these clients through the Channel Access Server. These fields most typically apply when the CA Client is monitoring the VAL field of a record. Most other fields post a monitor whenever they are changed. For instance, a Channel Access put to an alarm limit causes a monitor to be posted to any client that is monitoring that field. The Channel Access client can select which types of change it is notified about (see Channel Access Deadband Selection). For more information about using monitors, see the Channel Access Reference Guide. | |||

== Rate Limits == | |||

The inherent rate limit is the rate at which the record is scanned. Monitors are posted only when the record is processed, so the scan rate is the upper bound on the monitor rate. There are currently no mechanisms for the client to rate limit a monitor. If a record is being processed at a much higher rate than an application wants, either the database developer can make a second record that is processed at a lower rate and have the client connect to that record, or the client can disregard the monitors until the time stamp reflects the change. | |||

== Channel Access Deadband Selection == | |||

The Channel Access client can set a mask to indicate which types of change it wants to monitor. There are three: value change, archive change, and alarm change. | |||

-- | === Value Change Monitors === | ||

The value change monitors are typically sent whenever a field in the database changes. The VAL field is the exception. If the MDEL field is set, the VAL field is sent when the monitor is first set, and is sent again only when the VAL field has changed by more than MDEL. Note that an MDEL of 0 sends a monitor whenever the VAL field changes, and an MDEL of -1 sends a monitor whenever the record is processed, because MDEL is compared with the absolute value of the difference between the previous scan and the current scan. An MDEL of -1 is useful for scalars that are triggered, where a positive indication that the trigger occurred is required. | |||
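The effect of different MDEL settings can be illustrated with a small sketch. It is not the record support code: the class <code>ValueMonitor</code> is hypothetical, and comparing each new value against the last value that was posted is an assumption about how the change is measured.

```python
class ValueMonitor:
    """Decide whether processing a record posts a value monitor for VAL."""

    def __init__(self, mdel):
        self.mdel = mdel
        self.last_posted = None  # nothing has been sent to the client yet

    def process(self, val):
        """Return True if a monitor is posted for this new value."""
        first = self.last_posted is None
        # Post on the first update, then only when the absolute change
        # since the last posted value exceeds MDEL.
        if first or abs(val - self.last_posted) > self.mdel:
            self.last_posted = val
            return True
        return False


if __name__ == "__main__":
    for mdel in (0.5, 0, -1):
        monitor = ValueMonitor(mdel)
        posted = [monitor.process(v) for v in (1.0, 1.0, 1.2, 1.6)]
        print(mdel, posted)
```

With an MDEL of -1 the absolute difference is always greater than MDEL, so every processing posts a monitor even when the value is unchanged.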

Monitors are a | === Archive Change Monitors === | ||

The archive change monitors are typically sent whenever a field in the database changes. The VAL field is the exception. If the ADEL field is set, the VAL field is sent when the monitor is first set, and is sent again only when the VAL field has changed by more than ADEL. | |||

== | === Alarm Change Monitors === | ||

The alarm change monitors are only sent when the alarm severity or status changes. As there are filters on the alarm condition checking, the change of alarm status or severity is already filtered through those mechanisms. These are described in [[#Alarm Specification|''Alarm Specification'']]. | |||

== Metadata Changes == | |||

When a Channel Access Client connects to a field, it typically requests some metadata related to that field. For example, a connection from an operator interface typically requests metadata that includes display limits, control limits, and display information such as precision and engineering units. If any of the fields in a record that are included in this metadata change after the connection is made, the client is not informed, so the change is not reflected unless the client disconnects and reconnects. A new flag is being added to the Channel Access Client to support posting a monitor to the client whenever any of this metadata changes. Clients can then request the metadata and reflect the change. Stay tuned for this improvement in the record support and channel access clients. | |||

== Client specific Filtering == | |||

Several situations have come up in which client specific filtering would be useful. These include event filtering, rate guarantee, rate limit, and value change. | |||

=== Event Filtering === | |||

There are several cases where a monitor was sent from a channel only when a specific event was true. For instance, there are diagnostics that are read at 1 kHz. A control program may only want this information when the machine is producing a particular beam such as a linac that has several injectors and beam lines. These are virtual machines that want to be notified when the machine is in their mode. These modes can be interleaved at 60 Hz in some cases. A fault analysis tool may only be interested in all of this data when a fault occurs and the beam is dumped. There are two efforts here: one at LANL and one from ANL/BNL. These should be discussed in the near future. | |||

=== Rate Guarantee === | |||

Some clients may want to receive a monitor at a given rate. Binary inputs that only notify on change of state may not post a monitor for a very long time. Some clients may prefer to have a notification at some rate even when the value is not changing. | |||

== | === Rate Limit === | ||

There is a limit to the rate at which most clients care to be notified. Currently, only the SCAN period limits this. A user imposed limit is needed in some cases such as a data archiver that would only want this channel at 1 Hz (all channels on the same 1 msec in this case). | |||

=== Value Change === | |||

Different clients may have a need to set different deadbands among them. No specific case is cited. | |||

= Control Specification = | = Control Specification = | ||

| Line 692: | Line 654: | ||

---- | ---- | ||

A control loop is a set of database records used to maintain control autonomously. Each output record has two fields that help implement this independent control: the desired output location field (DOL) and the output mode select field (OMSL). The OMSL field has two mode choices: <code>closed_loop</code> or <code>supervisory</code>. When the closed loop mode is chosen, the desired output is retrieved from the location specified by the DOL field and placed into the VAL field. When the supervisory mode is chosen, the desired output value is the VAL field. In supervisory mode the DOL link is not retrieved, and VAL is typically set by the operator through a Channel Access "Put". | |||

== Closing an Analog Control Loop == | == Closing an Analog Control Loop == | ||

In a simple control loop an analog input record reads the value of a process variable or PV. Then, a PID record retrieves the value from the analog input record | In a simple control loop an analog input record reads the value of a process variable or PV. The operator sets the Setpoint in the PID record. Then, a PID record retrieves the value from the analog input record and computes the error - the difference between the readback and the setpoint. The PID record computes the new output setting to move the process variable toward the setpoint. The analog output record gets the value from the PID through the DOL when the OMSL is closed_loop. It sets the new output and on the next period repeats this process. | ||

== Configuring an Interlock ==

When certain conditions become true in the process, they may trip an interlock. The result of this interlock is to move something into a safe state or to mitigate damage by taking some action. One example is the closing of a vacuum valve to isolate a vacuum loss. When a vacuum reading in one region of a machine is not in the operating range, an interlock is used to either close a valve or prohibit it from being opened. This can be implemented by reading several vacuum gauges in an area into a calculation record. The expression in the calculation record can express the condition that permits the valve to open. The result of the expression is then referenced by the DOL field of a binary output record that controls the valve. If the binary output has the OMSL field set to closed_loop, it sets the valve to the value of the calculation record. If it is set to supervisory, the operator can override the interlock and control the valve directly.
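The interlock described above might be sketched as the following database fragment. This is only an illustration under stated assumptions: the record names (Gauge_1, Gauge_2, Valve_Permit, Valve_Cmd), the 0.001 threshold, the 1 second scan rate, and the state names are all hypothetical, and the hardware-specific DTYP, INP, and OUT fields are left out.

```
# Hypothetical interlock sketch; names and threshold are assumptions.
# Gauge_1 and Gauge_2 stand for ai records reading the vacuum gauges.
record(calc, "Valve_Permit") {
    field(SCAN, "1 second")
    field(INPA, "Gauge_1")
    field(INPB, "Gauge_2")
    # Result is 1 (permit open) only when both readings are below the limit.
    field(CALC, "(A<0.001)&&(B<0.001)")
    field(FLNK, "Valve_Cmd")          # process the valve record afterwards
}

record(bo, "Valve_Cmd") {
    field(ZNAM, "Closed")
    field(ONAM, "Open")
    field(DOL,  "Valve_Permit")       # desired output comes from the calc record
    field(OMSL, "closed_loop")        # set to "supervisory" to allow operator override
}
```

With OMSL set to closed_loop, each time Valve_Cmd processes it fetches the permit value through DOL into VAL, so the valve follows the calculation.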

Latest revision as of 15:03, 29 July 2014

Database Concepts

This chapter covers the general functionality that is found in all database records. The topics covered are I/O scanning, I/O address specification, data conversions, alarms, database monitoring, and continuous control:

- Scanning Specification describes the various conditions under which a record is processed.

- Address Specification explains the source of inputs and the destination of outputs.

- Conversion Specification covers data conversions from transducer interfaces to engineering units.

- Alarm Specification presents the many alarm detection mechanisms available in the database.

- Monitor Specification details the mechanism which notifies operators about database value changes.

- Control Specification explains the features available for achieving continuous control in the database.

These concepts are essential in order to understand how the database interfaces with the process.

The EPICS databases can be created using visual tools (VDCT, CapFast) or by manual creation of a database "myDatabase.db" text file. Visual Database Configuration Tool (VDCT), a java application from Cosylab, is a more modern tool for database creation/editing that runs on Linux, Windows, and Sun.

Scanning Specification

Scanning determines when a record is processed. A record is processed when it processes its data and performs any actions related to that data. For example, when an output record is processed, it fetches the value which it is to output, converts the value, and then writes that value to the specified location. Each record must specify the scanning method that determines when it will be processed. There are three scanning methods for database records: (1) periodic, (2) event, and (3) passive.

- Periodic scanning occurs on set time intervals.

- Event scanning occurs on either an I/O interrupt event or a user-defined event.

- Passive scanning occurs when the records linked to the passive record are scanned, or when a value is "put" into a passive record through the database access routines.

For periodic or event scanning, the user can also control the order in which a set of records is processed by using the PHASE mechanism. For event scanning, the user can control the priority at which a record will process. In addition to the scan and the phase mechanisms, there are data links and forward processing links that can be used to cause processing in other records. This section explains these concepts.

Periodic Scanning

The periodic scan tasks run as close to the frequency specified as possible. When each periodic scan task starts, it calls the gettime routine, then processes all of the records on this period. After the processing, gettime is called again and this thread sleeps the difference between the scan period and the time to process the records. If the 1 second scan records take 100 milliseconds to process, then the 1 second scan period will start again 900 milliseconds after completion. The following periods for scanning database records are available, though EPICS can be configured to recognize more scan periods:

- 10 second

- 5 second

- 2 second

- 1 second

- .5 second

- .2 second

- .1 second

The period that best fits the nature of the signal should be specified. A five-second interval is adequate for the temperature of a mass of water because it does not change rapidly. However, some power levels may change very rapidly, so they need to be scanned every 0.5 seconds. In the case of a continuous control loop, where the process variable being controlled can change quickly, the 0.1 second interval may be the best choice.

For a record to scan periodically, a valid choice must be entered in its SCAN field. The available choices depend on the configuration of the menuScan.dbd file. As with most other fields that consist of a menu of choices, the choices available for the SCAN field can be changed by editing the appropriate .dbd (database definition) file. dbd files are ASCII files that are used to generate header files that are, in turn, used to compile the database code. Many dbd files can be used to configure other things besides the choices of menu fields.
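As a minimal sketch, a record instance selects its scan period by naming one of the menu choice strings in its SCAN field. The record name Water_Temp is hypothetical, and the device-specific DTYP and INP fields are omitted:

```
# Hypothetical record; the name is for illustration only.
record(ai, "Water_Temp") {
    field(DESC, "Slowly changing temperature")
    field(SCAN, "5 second")   # must match a choice string in menuScan.dbd
}
```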

Here is an example of a menuScan.dbd file, which has the default menu choices for all periods listed above as well as choices for event scanning, passive scanning, and I/O interrupt scanning:

menu(menuScan) {

choice(menuScanPassive,"Passive")

choice(menuScanEvent,"Event")

choice(menuScanI_O_Intr,"I/O Intr")

choice(menuScan10_second,"10 second")

choice(menuScan5_second,"5 second")

choice(menuScan2_second,"2 second")

choice(menuScan1_second,"1 second")

choice(menuScan_5_second,".5 second")

choice(menuScan_2_second,".2 second")

choice(menuScan_1_second,".1 second")

}

The first three choices must appear first and in the order shown. The remaining definitions are for the periodic scan rates, which must appear in order from slowest to fastest (the order directly controls the thread priority assigned to the particular scan rate, and faster scan rates should be assigned higher thread priorities). During IOC initialization, the menu choice strings are read when the scan tasks are initialized. The number of periodic scan rates and the period of each rate are determined from the menu choice strings. Thus periodic scan rates can be changed by changing menuScan.dbd and loading this version via dbLoadDatabase. The only requirement is that each periodic choice string must begin with a numeric value specified in units of seconds. For example, to add a choice for 0.015 seconds, add the following line after the 0.1 second choice:

choice(menuScan_015_second, " .015 second")

The range of values for scan periods can be from one clock tick (vxWorks out of the box supports 0.015 seconds, or a maximum rate of 60 Hz) to the maximum number of ticks available on the system. Note, however, that the order of the choices is essential. The first three choices must appear in the above order. Then the remaining choices should follow in descending order, the biggest time period first and the smallest last.

Event Scanning

There are two types of events supported in the input/output controller (IOC) database, the I/O interrupt event and the user-defined event. For each type of event, the user can specify the scheduling priority of the event using the PRIO or priority field. The scheduling priority refers to the priority the event has on the stack relative to other running tasks. There are three possible choices: LOW, MEDIUM, or HIGH. A low priority event has a priority a little higher than Channel Access. A medium priority event has a priority about equal to the median of periodic scanning tasks. A high priority event has a priority equal to the event scanning task.

I/O Interrupt Events

Scanning on I/O interrupt causes a record to be processed when a driver posts an I/O Event. In many cases these events are posted in the interrupt service routine. For example, if an analog input record gets its value from a Xycom 566 Differential Latched card and it specifies I/O interrupt as its scanning routine, then the record will be processed each time the card generates an interrupt (not all types of I/O cards can generate interrupts). Note that even though some cards cannot actually generate interrupts, some driver support modules can simulate interrupts. In order for a record to scan on I/O interrupts, its SCAN field must specify I/O Intr.
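A record set up for I/O interrupt scanning might look like the sketch below. The record name is hypothetical, and the DTYP and INP values depend on the card and driver in use, so they are deliberately left out rather than guessed:

```
# Hypothetical record; DTYP and INP are hardware-specific and omitted here.
record(ai, "Latched_Input") {
    field(SCAN, "I/O Intr")   # process whenever the driver posts an I/O event
    field(PRIO, "HIGH")       # scheduling priority: LOW, MEDIUM, or HIGH
}
```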

User-defined Events

The user-defined event mechanism processes records that are meaningful only under specific circumstances. User-defined events can be generated by the post_event() database access routine. Two records, the event record and the timer record, are also used to post events. For example, there is the timing output, generated when the process is in a state where a control can be safely changed. Timing outputs are controlled through Timer records, which have the ability to generate interrupts. Consider a case where the timer record is scanned on I/O interrupt and the timer record's event field (EVNT) contains an event number. When the record is scanned, the user-defined event will be posted. When the event is posted, all records will be processed whose SCAN field specifies event and whose event number is the same as the generated event. User-defined events can also be generated through software. Event numbers are configurable and should be controlled through the project engineer. They only need to be unique per IOC because they only trigger processing for records in the same IOC.

All records that use the user-defined event mechanism must specify Event in their SCAN field and an event number in their EVNT field.
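The following fragment sketches one way to wire a user-defined event, assuming a soft event record that posts the event number held in its VAL field each time it processes. The record names, the event number 5, and the 10 second scan rate are hypothetical choices for illustration:

```
# Posts user-defined event 5 every time it is processed.
record(event, "Post_Event_5") {
    field(SCAN, "10 second")
    field(VAL,  "5")
}

# Processed whenever event 5 is posted in this IOC.
record(calc, "Event_Counter") {
    field(SCAN, "Event")
    field(EVNT, "5")
    field(INPA, "Event_Counter")   # read back its own previous value
    field(CALC, "A+1")             # count how many times the event fired
}
```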

Passive Scanning

Passive records are processed when they are referenced by other records through their link fields or when a channel access put is done to them.

Channel Access Puts to Passive Scanned Records

In the case where a channel access put is done to a record, the field being written has an attribute that determines whether this put causes record processing. In the case of all records, putting to the VAL field causes record processing. Consider a binary output that has a SCAN of Passive. If an operator display has a button on the VAL field, every time the button is pressed, a channel access put is sent to the record. When the VAL field is written, the Passive record is processed and the newly converted RVAL is written to the device specified in the OUT field through the device support specified by DTYP. Fields that change the way a record behaves typically cause the record to process. Another field that would cause the binary output to process is ZSV, which is the alarm severity if the binary output record is in state Zero (0). If the record was in state 0 and the severity of being in that state changed from No Alarm to Minor Alarm, the only way to catch this on a SCAN Passive record is to process it. The fields that cause binary output records to process are configured in the bo.dbd file. The ZSV severity is configured as follows:

field(ZSV,DBF_MENU) {

prompt("Zero Error Severity")

promptgroup(GUI_ALARMS)

pp(TRUE)

interest(1)

menu(menuAlarmSevr)

}

where the line "pp(TRUE)" indicates that the record is processed when a channel access put is done to this field.

Database Links to Passive Record

The records in the process database use link fields to configure data passing and scheduling (or processing). These fields are either INLINK, OUTLINK, or FWDLINK fields.

Forward Links

In the database definition file (.dbd) these fields are defined as follows:

field(FLNK,DBF_FWDLINK) {

prompt("Forward Process Link")

promptgroup(GUI_LINKS)

interest(1)

}

If the record that is referenced by the FLNK field has a SCAN field of Passive, then the record is processed after the record with the FLNK. The FLNK field only causes record processing; no data is passed. In Figure 1, three records are shown. The ai record "Input_2" is processed periodically. At each interval, Input_2 is processed. After Input_2 has read the new input, converted it to engineering units, checked the alarm condition, and posted monitors to Channel Access, the calc record "Calculation_2" is processed. Calculation_2 reads the input, performs the calculation, checks the alarm condition, and posts monitors to Channel Access; then the ao record "Output_2" is processed. Output_2 reads the desired output, rate limits it, clamps the range, calls the device support for the OUT field, checks alarms, posts monitors, and then is complete.

Figure 1
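The forward-link chain described for Input_2, Calculation_2, and Output_2 could be written roughly as follows. The figure itself is not reproduced here, so the 1 second scan rate, the CALC expression, and the specific input links are assumptions made for illustration, and the hardware fields (DTYP, INP, OUT) are omitted:

```
record(ai, "Input_2") {
    field(SCAN, "1 second")          # assumed rate; the text only says "periodically"
    field(FLNK, "Calculation_2")     # process the calc record next
}

record(calc, "Calculation_2") {
    # SCAN defaults to Passive, so this record runs only when linked to.
    field(INPA, "Input_2")           # fetch the value just read
    field(CALC, "A*2")               # placeholder calculation
    field(FLNK, "Output_2")
}

record(ao, "Output_2") {
    field(DOL,  "Calculation_2")     # desired output comes from the calc record
    field(OMSL, "closed_loop")
}
```

Note that the FLNK fields only trigger processing. The values move through the INPA and DOL links.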

Input Links